Extreme Workflow Composer HA Cluster - BETA¶

Extreme Workflow Composer (EWC) is the commercial version of the StackStorm automation platform. EWC adds priority support and advanced features such as fine-grained access control, LDAP, and Workflow Designer. To learn more about Extreme Workflow Composer, get an evaluation license, or request a quote, visit stackstorm.com/#product.

This document provides an installation blueprint for a Highly Available StackStorm Enterprise (Extreme Workflow Composer) cluster based on Kubernetes, a container orchestration platform at planet scale.

The cluster deploys at least 2 replicas of most StackStorm microservices for redundancy and reliability. It also configures the backends that st2 relies on: a MongoDB HA ReplicaSet for the database, RabbitMQ HA for the communication bus, and etcd for distributed coordination. Altogether, this adds up to a fleet of more than 30 pods.

The source code for K8s resource templates is available as a GitHub repo: StackStorm/stackstorm-enterprise-ha.

Warning

Beta quality! As this deployment method is in beta, its documentation and code may be substantially modified and refactored.

Requirements¶

- Kubernetes cluster

- Helm, the K8s package manager, and Tiller, its server-side component

- Sufficient computing resources for production use, as described in System Requirements

Usage¶

This document assumes some basic knowledge of Kubernetes and Helm. Please refer to the K8s and Helm documentation if you run into any difficulties using these tools.

However, here are some minimal instructions to get started.

Deployment¶

The StackStorm Enterprise HA cluster is available as a Helm chart, a bundled K8s package that makes installing the complex StackStorm infrastructure as easy as:

```shell
# Add the Helm StackStorm repository
helm repo add stackstorm https://helm.stackstorm.com/

# Replace `<EWC_LICENSE_KEY>` with a real license key, obtained by email
helm install --set secrets.st2.license=<EWC_LICENSE_KEY> stackstorm/stackstorm-enterprise-ha
```

Note

Don’t have a StackStorm Enterprise license?

Request a 90-day free trial at https://stackstorm.com/#product

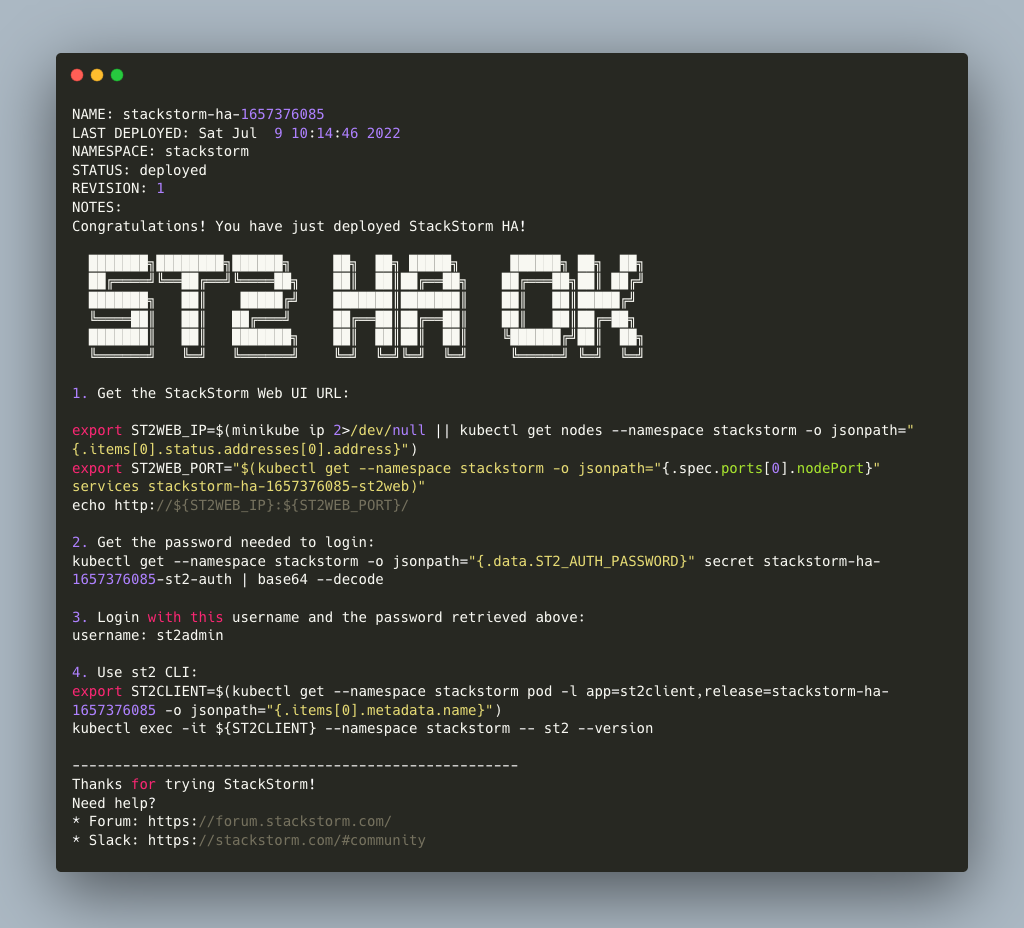

Once the deployment is finished, it’ll show you first steps how to start working with the new cluster via WebUI or st2 client:

The installation uses some unsafe defaults, which we recommend changing thoughtfully for production use via Helm values.yaml.

Helm values.yaml¶

The stackstorm-enterprise-ha Helm package comes with default settings in values.yaml.

Fine-tune them to achieve the desired configuration for the StackStorm Enterprise HA K8s cluster.

You can configure:

- number of replicas for each component

- st2 auth secrets

- st2.conf settings

- RBAC roles, assignments and mappings

- custom st2 packs and their configs

- st2web SSL certificate

- SSH private key

- K8s resources and settings to control pod/deployment placement

- configuration for the MongoDB and RabbitMQ clusters

- configuration for the in-cluster Docker registry
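
As a minimal sketch, the snippet below writes a custom_values.yaml override file that touches two items from the list above: the license key and the number of action runner replicas. The secrets.st2.license key comes from the install command in this document. The st2actionrunner.replicas key is an assumption modeled on the component names used here, so confirm it against the chart's own values.yaml.

```shell
set -euo pipefail

# Write a small override file; Helm merges it on top of the chart defaults.
# secrets.st2.license matches the --set flag used at install time.
# st2actionrunner.replicas is an ASSUMED key name: check the chart's values.yaml.
cat > custom_values.yaml <<'EOF'
secrets:
  st2:
    license: "<EWC_LICENSE_KEY>"
st2actionrunner:
  replicas: 8
EOF

# Show the result for a quick review before using it.
cat custom_values.yaml
```

You can then pass the file to helm install or helm upgrade with -f custom_values.yaml.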

Warning

It’s highly recommended to set your own secrets, as the file contains unsafe defaults like self-signed SSL certificates, SSH keys, StackStorm access credentials, and MongoDB/RabbitMQ passwords!
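
One way to avoid shipping the default credentials is to generate a random password at deploy time and keep it in a private override file. In this sketch, the secrets.st2.username and secrets.st2.password keys are hypothetical; this document only confirms secrets.st2.license. Before relying on the file, look up where values.yaml actually stores the StackStorm credentials and the MongoDB/RabbitMQ passwords.

```shell
set -euo pipefail

# 16 random bytes rendered as 32 lowercase hex characters.
ST2_PASSWORD="$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')"

# HYPOTHETICAL key names under secrets.st2: verify them in values.yaml.
# The heredoc delimiter is unquoted so ${ST2_PASSWORD} gets expanded.
cat > secrets_values.yaml <<EOF
secrets:
  st2:
    username: st2admin
    password: "${ST2_PASSWORD}"
EOF

# The file holds a secret: make it readable by the current user only.
chmod 600 secrets_values.yaml
```

Pass it alongside your other overrides, for example helm upgrade -f custom_values.yaml -f secrets_values.yaml, and keep it out of version control.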

Upgrading¶

Once you make any changes to Helm values, upgrade the cluster:

```shell
helm repo update
helm upgrade <release-name> stackstorm/stackstorm-enterprise-ha
```

Helm redeploys the components affected by the change, keeping the desired number of replicas so that every service stays alive during the rolling upgrade.
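
To confirm that the rolling upgrade has finished, you can wait on each Deployment of the release. The sketch below uses a hypothetical helper, wait_for_rollout. It relies on the release=<release-name> label used by the log commands in this document and on the standard kubectl rollout status command. StatefulSets (MongoDB, RabbitMQ) are not covered.

```shell
# wait_for_rollout RELEASE: hypothetical helper that blocks until every
# Deployment labelled release=RELEASE has completed its rollout.
wait_for_rollout() {
  local release="$1" deploy
  for deploy in $(kubectl get deployments -l "release=${release}" -o name); do
    # Give up on the first Deployment that does not converge within 5 minutes.
    kubectl rollout status "${deploy}" --timeout=300s || return 1
  done
}
```

For example, run wait_for_rollout with your release name right after helm upgrade.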

Tips & Tricks¶

Save the custom Helm values you want to override in a separate file, then upgrade the cluster with it:

```shell
helm upgrade -f custom_values.yaml <release-name> stackstorm/stackstorm-enterprise-ha
```

Get logs for the entire StackStorm cluster, including dependent services, for a Helm release:

```shell
kubectl logs -l release=<release-name>
```

Get logs only for the StackStorm backend services, excluding st2web and DB/MQ/etcd:

```shell
kubectl logs -l release=<release-name>,tier=backend
```
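
Keep in mind that when kubectl logs is given a label selector, it prints only the 10 most recent lines of each pod unless --tail is set. The sketch below is a hypothetical wrapper, st2_logs. It builds the same selectors as the commands above and requests the complete output from every container.

```shell
# st2_logs RELEASE [TIER]: hypothetical wrapper around the selectors above.
st2_logs() {
  local selector="release=$1"
  # Optionally narrow the selection down to one tier, e.g. "backend".
  if [ -n "${2:-}" ]; then
    selector="${selector},tier=$2"
  fi
  # --tail=-1 lifts the 10-lines-per-pod default that applies with -l.
  kubectl logs -l "${selector}" --tail=-1 --all-containers=true
}
```

For example, st2_logs my-release backend returns the full backend logs for a release named my-release.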

Custom st2 packs¶

To follow the stateless model, shipping custom st2 packs is now part of the deployment process.

This means that st2 pack install won’t work in a distributed environment, and you have to bundle all the required packs into a Docker image that you can codify, version, package, and distribute in a repeatable way.

The responsibility of this Docker image is to hold the pack content and the packs' virtualenvs. So the custom st2 pack Docker image you build is essentially a couple of read-only directories that are shared with the corresponding st2 services in the cluster.

For your convenience, we created a new st2-pack-install <pack1> <pack2> <pack3> command that helps install custom packs during the Docker build process without relying on DB and MQ connections.

The Helm chart brings helpers to simplify this experience. These include the stackstorm/st2pack:builder Docker image and a private Docker registry, which you can optionally enable in Helm values.yaml to easily push and pull your custom packs within the cluster.

For more detailed instructions, see StackStorm/stackstorm-enterprise-ha#Installing packs in the cluster.
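
Here is a minimal sketch of that build step. It assumes that st2-pack-install is on the PATH inside the stackstorm/st2pack:builder image and that your registry accepts pushes. The build_packs_image helper and the image tag are hypothetical; the linked repository describes the exact image layout the chart expects.

```shell
# build_packs_image IMAGE PACK...: hypothetical helper that bakes packs
# (and their virtualenvs) into an image and pushes it to a registry.
build_packs_image() {
  local image="$1"
  shift
  # Single-stage Dockerfile on top of the builder image named above;
  # the remaining arguments become the list of packs to install.
  cat > Dockerfile <<EOF
FROM stackstorm/st2pack:builder
RUN st2-pack-install $*
EOF
  docker build -t "${image}" . && docker push "${image}"
}
```

For example, build_packs_image registry.example.com/st2packs:0.1 email slack would build and push an image containing the email and slack packs. The registry host here is a placeholder.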

Note

There is an alternative approach: sharing pack content via read-write-many NFS (Network File System) storage, as recommended in High Availability Deployment. As the beta is in progress and both methods have their pros and cons, we’d like to hear your feedback on which approach works better for you.

Components¶

For HA reasons, by default and at a minimum, the StackStorm K8s cluster deploys more than 30 pods in total.

This section describes their role and deployment specifics.

st2client¶

A helper container to switch into and run st2 CLI commands against the deployed StackStorm Enterprise cluster. All resources like credentials, configs, RBAC, packs, keys and secrets are shared with this container.

```shell
# Obtain the st2client pod name
ST2CLIENT=$(kubectl get pod -l app=st2client,support=enterprise -o jsonpath="{.items[0].metadata.name}")

# Run a single st2 client command
kubectl exec -it "${ST2CLIENT}" -- st2 --version

# Switch into a container shell and use the st2 CLI
kubectl exec -it "${ST2CLIENT}" -- /bin/bash
```
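
If you run st2 commands often, a small shell function can hide the pod lookup. The st2k name is hypothetical. The function reuses the label selector and the kubectl exec form shown above, but omits -t so that it also works in scripts and pipes.

```shell
# st2k ARGS...: hypothetical wrapper that runs "st2 ARGS..." in the st2client pod.
st2k() {
  local pod
  pod="$(kubectl get pod -l app=st2client,support=enterprise \
    -o jsonpath='{.items[0].metadata.name}')"
  # -i keeps stdin attached; no -t, so output can be piped or captured.
  kubectl exec -i "${pod}" -- st2 "$@"
}
```

For example, st2k action list --pack=core lists the core pack actions without opening a shell in the pod.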

st2web¶

st2web is the StackStorm Web UI admin dashboard. By default, the st2web K8s config includes a Pod Deployment and a Service.

2 replicas (configurable) of st2web serve the web app and proxy requests to st2auth, st2api, and st2stream.

Note

The K8s Service uses only NodePort at the moment, so installing this chart will not provision a K8s resource of type LoadBalancer or Ingress (#6). Depending on your Kubernetes cluster setup, you may need additional configuration to access the Web UI service or expose it to the public network.
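
On a cluster where the nodes are directly reachable, one way to find the Web UI address is to combine the Service's NodePort with a node IP. This sketch makes two assumptions that you should verify with kubectl get svc. First, it assumes the st2web Service carries an app=st2web label, by analogy with the st2client selector above. Second, it assumes the first listed port is the HTTPS one.

```shell
# st2web_url: hypothetical helper printing https://NODE_IP:NODE_PORT for st2web.
st2web_url() {
  local port ip
  # ASSUMED label: app=st2web. Check with "kubectl get svc --show-labels".
  port="$(kubectl get svc -l app=st2web \
    -o jsonpath='{.items[0].spec.ports[0].nodePort}')"
  # Internal IP of the first node; cloud setups may need ExternalIP instead.
  ip="$(kubectl get nodes \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}')"
  echo "https://${ip}:${port}"
}
```

Where node IPs are not reachable from your workstation, kubectl port-forward to the st2web Service is an alternative.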

st2auth¶

All authentication is managed by the st2auth service.

The K8s configuration includes a Pod Deployment backed by 2 replicas by default and a Service of type ClusterIP listening on port 9100.

Multiple st2auth processes can sit behind a load balancer in an active-active configuration, and you can increase the number of replicas at your discretion.

st2api¶

This service hosts the REST API endpoints that serve requests from the Web UI, CLI, ChatOps, and other st2 components.

The K8s configuration consists of a Pod Deployment with 2 replicas by default for HA and a ClusterIP Service accepting HTTP requests on port 9101.

st2api is one of the most important StackStorm services, with a lot of logic involved, so we recommend increasing the number of replicas to distribute the load if you expect a heavier processing workload.
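
Replica counts can also be changed on the command line without editing a file. In this sketch, scale_st2 is a hypothetical helper, and the <component>.replicas key layout is an assumption about the chart's values.yaml. The --reuse-values flag makes Helm keep the overrides from the previous release, such as the license key.

```shell
# scale_st2 RELEASE COMPONENT REPLICAS: hypothetical helper.
scale_st2() {
  local release="$1" component="$2" replicas="$3"
  # ASSUMED key layout: <component>.replicas, e.g. st2api.replicas.
  helm upgrade "${release}" stackstorm/stackstorm-enterprise-ha \
    --reuse-values --set "${component}.replicas=${replicas}"
}
```

For example, scale_st2 my-release st2api 4 would request 4 st2api replicas for a release named my-release.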

st2stream¶

st2stream exposes a server-sent event stream, used by clients like the Web UI and ChatOps to receive updates from the st2stream server.

Similar to st2auth and st2api, the st2stream K8s configuration includes a Pod Deployment with 2 replicas for HA (can be increased in values.yaml) and a ClusterIP Service listening on port 9102.

st2rulesengine¶

st2rulesengine evaluates rules when it sees new triggers and decides whether a new action execution should be requested.

The K8s config includes a Pod Deployment with 2 (configurable) replicas by default for HA.

st2timersengine¶

st2timersengine is responsible for scheduling all user-specified timers, aka st2 cron. Only a single replica is created via K8s Deployment, as timersengine can’t work in active-active mode at the moment (multiple timers would produce duplicate events). Instead, it relies on K8s failover/reschedule capabilities to handle process failure.

st2workflowengine¶

st2workflowengine drives the execution of Orquesta workflows and schedules actions to be run by another component, st2actionrunner.

Multiple st2workflowengine processes can run in active-active mode, so a minimum of 2 K8s Deployment replicas are created by default.

All the workflow engine processes share the load and pick up more work if one or more of the processes become unavailable.

Note

As Mistral is going to be deprecated and removed from the StackStorm platform soon, the Helm chart relies only on Orquesta st2workflowengine, the new native workflow engine.

st2notifier¶

Multiple st2notifier processes can run in active-active mode. They use connections to RabbitMQ and MongoDB to generate triggers based on action execution completion and to reschedule actions.

In an HA deployment, a minimum of 2 replicas of st2notifier must be running, which requires a coordination backend. In our case, that backend is etcd.

st2sensorcontainer¶

st2sensorcontainer manages StackStorm sensors: it starts, stops, and restarts them as subprocesses.

At the moment, the K8s configuration consists of a Deployment with a hardcoded single replica.

Future plans are to rework this setup to benefit from the Docker-friendly single-sensor-per-container mode #4179 (available since st2 v2.9) as a way of Partitioning Sensors. This would distribute the computing load between many pods and rely on K8s failover/reschedule mechanisms, instead of running everything in a single instance of st2sensorcontainer.

st2actionrunner¶

StackStorm workers that actually execute actions.

By default, 5 replicas are configured for the K8s Deployment to increase StackStorm’s ability to execute actions without excessive queuing.

st2actionrunner relies on etcd for coordination. Its replica count is likely the first thing to raise if you have a lot of actions to execute per time period in your StackStorm cluster.

st2garbagecollector¶

A service that cleans up old executions and other operational data based on the configured settings.

A single st2garbagecollector replica for the K8s Deployment is enough, given the periodic nature of its work.

By default, this process does nothing and needs to be configured in st2.conf settings (via values.yaml).

Purging stale data can significantly improve cluster performance, so we recommend configuring st2garbagecollector in production.
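
The retention periods are regular st2.conf options in the [garbagecollector] section. This sketch sets action_executions_ttl and trigger_instances_ttl, both expressed in days. How these options reach st2.conf through values.yaml is an assumption here. The sketch supposes that the chart exposes a free-form st2.config block that ends up in st2.conf, so check the chart's values.yaml for the real key.

```shell
set -euo pipefail

# ASSUMED values.yaml key: st2.config (free-form st2.conf snippet).
# The [garbagecollector] options below are standard st2.conf settings.
cat > gc_values.yaml <<'EOF'
st2:
  config: |
    [garbagecollector]
    # Delete action executions older than 30 days.
    action_executions_ttl = 30
    # Delete trigger instances older than 30 days.
    trigger_instances_ttl = 30
EOF
```

The resulting file can be applied like any other override with helm upgrade -f gc_values.yaml.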

MongoDB HA ReplicaSet¶

StackStorm works with MongoDB as a database engine. An external Helm chart is used to configure the MongoDB HA ReplicaSet.

By default, 3 nodes (1 primary and 2 secondaries) of MongoDB are deployed via K8s StatefulSet.

For more advanced MongoDB configuration, refer to the official mongodb-replicaset Helm chart settings, which can be fine-tuned via values.yaml.

RabbitMQ HA Cluster¶

RabbitMQ is a message bus StackStorm relies on for inter-process communication and load distribution.

An external Helm chart is used to deploy the RabbitMQ cluster in Highly Available mode.

By default, 3 nodes of RabbitMQ are deployed via K8s StatefulSet.

For more advanced RabbitMQ configuration, please refer to the official rabbitmq-ha Helm chart repository; all settings can be overridden via values.yaml.

etcd¶

StackStorm employs etcd as a distributed coordination backend, which StackStorm cluster components require to work properly in an HA scenario.

Currently, due to low demand, only 1 instance of etcd is created via K8s Deployment.

Future plans are to switch to the official Helm chart and configure a proper etcd/Raft cluster with 3 nodes by default (#8).

Docker registry¶

If you do not already have an appropriate Docker registry for storing custom st2 pack images, we made it very easy to deploy one in your K8s cluster. You can optionally enable the in-cluster Docker registry via values.yaml by setting docker-registry.enabled: true. This configures the additional third-party charts docker-registry and kube-registry-proxy.

Feedback Needed!¶

As this deployment method is new and the beta is in progress, we ask you to try it and provide your feedback via bug reports, ideas, feature requests, or pull requests in StackStorm/stackstorm-enterprise-ha.

We also encourage discussion in the Slack #docker channel, or you can write us an email.

Questions? Problems? Suggestions? Engage!

- Support Forum

- Slack community channel: stackstorm-community.slack.com (Register here)

- Support: support@stackstorm.com